
The role of AI assurance in AI governance

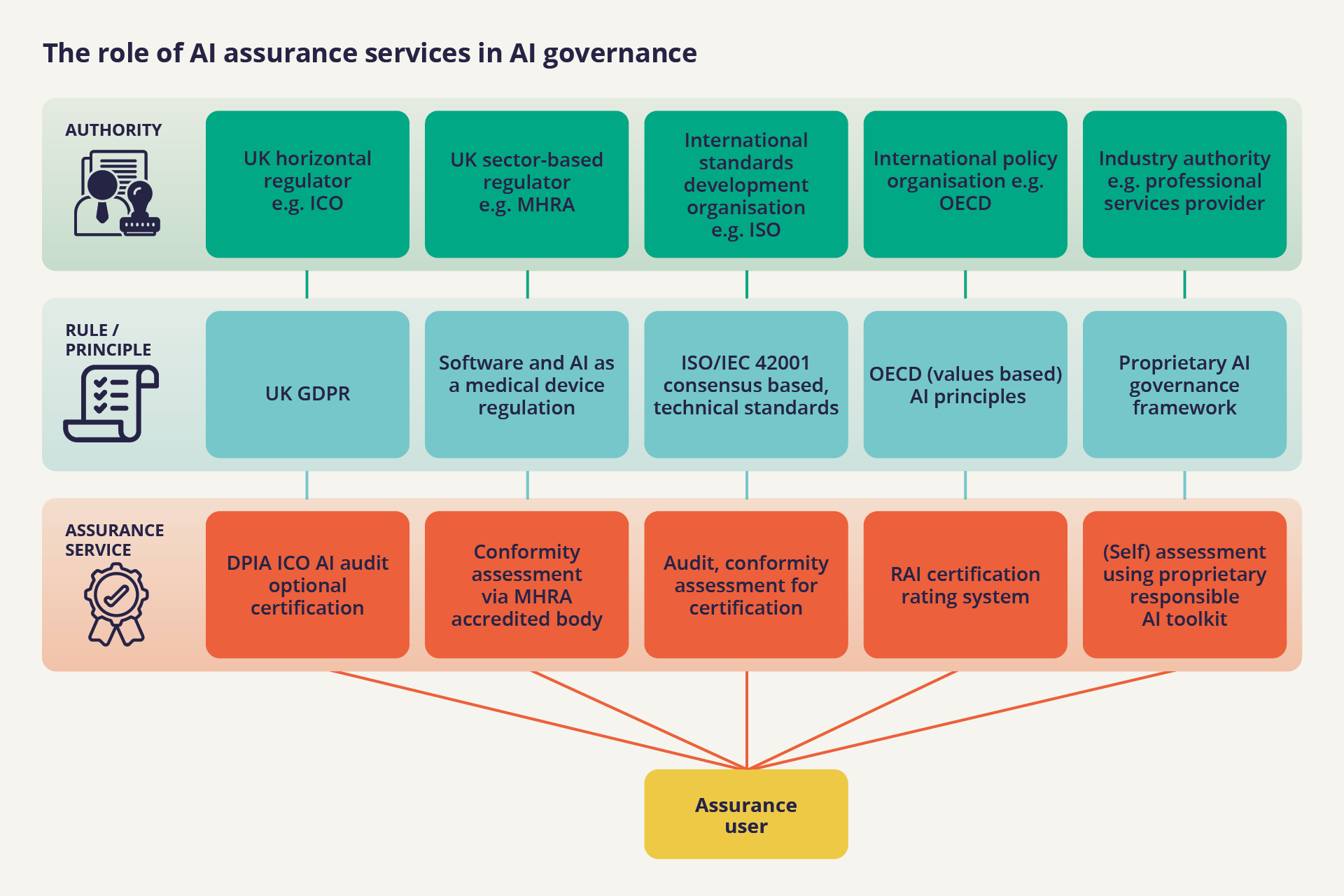

AI assurance services are a distinctive and important aspect of broader AI governance. AI governance covers all the means by which the development, use, outputs and impacts of AI can be shaped, influenced and controlled, whether by the government or by those who design, develop, deploy, buy or use these technologies. AI governance includes regulation, but also tools such as assurance, standards, and statements of principles and practice (often referred to as AI ethics).

Regulation, standards and other statements of principles and practice define what trustworthy AI looks like. Alongside this, AI assurance services provide the ‘infrastructure’ for

An AI assurance ecosystem can offer an agile

AI assurance could play a crucial role in a regulatory environment by providing a toolbox of mechanisms and processes to monitor regulatory compliance as well as the development of common practice beyond statutory requirements to which organisations can be held accountable.

Compliance with regulation

AI assurance mechanisms

- Implementation and elaboration of rules for the use of AI systems in specific circumstances.

- Translating rules into practical forms useful for end users and evaluating alternative models of implementation.

- Providing technical expertise and capacity to assess regulatory compliance across the system lifecycle.

- Assurance mechanisms can also be used to facilitate assessment against designated technical standards, which can provide a presumption of conformity with essential legal requirements. For example, the EU’s AI Act states that ‘compliance with standards…should be a means for providers to demonstrate conformity with the requirements of this Regulation.’

A market of AI assurance services, supported by public sector assurance services such as conformity assessment bodies, addresses critical challenges in ensuring that AI development is safe and beneficial.

Managing risk and building trust

Assurance services also enable stakeholders to ensure compliance with norms and principles of responsible innovation, beyond regulatory compliance. Assurance tools can be effective as

Post-compliance assurance is particularly useful in the AI context where the complexity of AI systems can make it very challenging to craft meaningful regulation for them. Assurance services offer means to describe, measure, and assign responsibility for AI systems’ impacts, risks and performance without the need to

LICENSE ATTRIBUTION

License: Open Government Licence v3.0

Created By: Centre for Data Ethics and Innovation (CDEI)

Source Title and Location: What is the role of AI assurance in AI governance?