
Needs and Responsibilities for AI Assurance

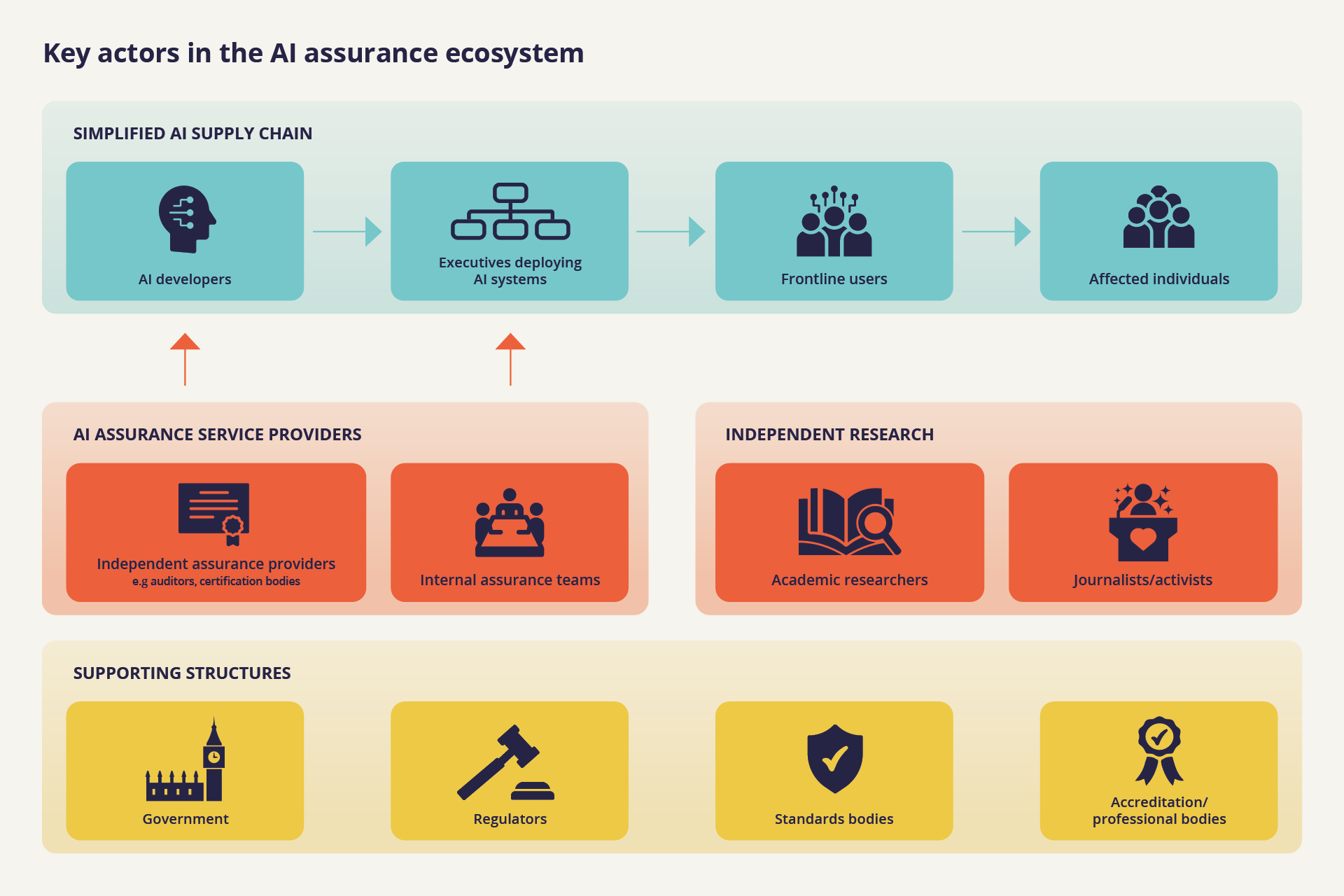

A range of actors, including regulators, developers, executives and frontline users, need to check that AI systems are trustworthy and compliant with regulation, and to demonstrate this to others. However, these actors often have limited information, or lack the specialist knowledge needed to check and verify others’ claims about an AI system’s trustworthiness.

In the diagram we have categorised three important groups of actors who will benefit from the development of an AI assurance ecosystem: the AI supply chain, AI assurance service providers, and supporting structures for AI assurance.

The efforts of different actors in this space are both interdependent and complementary. Building a mature assurance ecosystem will therefore require active and coordinated effort. The actors specified in the diagram are not meant to be exhaustive, but represent the key roles in the emerging AI assurance ecosystem.

This simplified diagram highlights the main roles of these three important groups of actors in the AI assurance ecosystem. However, it is important to note that while the primary goal of the ‘supporting structures’ is to set out criteria for trustworthy AI through regulation, technical standards or guidance, these actors can also provide assurance services via advisory, audit and certification functions, e.g. the

These actors can each play a number of interdependent roles within an assurance ecosystem. The table below illustrates each actor’s role in demonstrating the trustworthiness of AI systems and their own requirements for building trust in AI systems.

AI supply chain

| Assurance user | Role in demonstrating trustworthiness | Requirements for building trust |
|---|---|---|
| Developer | Need (1) to ensure their development is compliant with applicable regulations and guidelines and (2) to communicate their AI system’s compliance, and hence trustworthiness, to regulators, executives, frontline users and affected individuals. This will require agreed rules for the levels of transparency and information sharing required to demonstrate compliance. | Need clear standards, regulations and practice guidelines in place so that they can trust that the technologies they develop will be compliant and that they will not be liable. |
| Executive | Executives buying and/or deploying AI need (1) to ensure and demonstrate that the AI systems they buy and deploy meet applicable legal, ethical and technical standards (which are important for international interoperability); (2) to assess and manage the Environmental, Social and Corporate Governance (ESG) risks of AI systems, and in some cases decide whether or not to adopt them at all; and (3) to communicate their compliance and risk management to regulators, boards, frontline users and affected individuals. | Require clear and reliable evidence from developers and/or vendors that the AI systems they buy and deploy meet applicable ethical, legal and technical standards, to gain enough confidence to deploy these systems. |
| Frontline users | Need to communicate the AI system’s compliance with applicable regulations and guidelines to affected individuals, to demonstrate trustworthiness and enable them to have confidence in the use of the technology and to exercise their rights. | Individuals who use AI systems and their outputs to support their activities need to know enough about an AI system’s compliance to have confidence using it. |
| Affected individual | N/A | Citizens, consumers or employees need to understand enough about the use of AI systems in decisions affecting them, or being used in their presence (e.g. facial recognition technologies), to exercise their rights. |

Assurance service providers

| Assurance user | Role in demonstrating trustworthiness | Requirements for building trust |
|---|---|---|
| Independent assurance provider | Need to collect evidence, and to assess and evaluate AI systems and their use. Agreed standards are required to enable independent assurance providers to communicate evidence in ways that are understood, agreed on and trusted by their customers. | Require clear, agreed standards as well as reliable evidence from developers, vendors and executives about AI systems, to have confidence that the assurance they provide is valid and appropriate for a particular use case. |
| Research bodies | Contribute research on potential risks or develop potential assurance tools. | Need appropriate access to industry systems, documentation and information to be properly informed about the systems for which they are developing assurance tools and techniques. |
| Journalists/activists | Provide publicly available information and scrutiny to keep affected individuals informed. | Require rules and laws around transparency and information disclosure to have confidence that systems are trustworthy and, if they are not, that they are open to scrutiny. |

Supporting structures

| Assurance user | Role in demonstrating trustworthiness | Requirements for building trust |
|---|---|---|
| Governments | Governments that want to encourage responsible adoption of AI systems need to help shape an ecosystem of assurance services and tools to have confidence that AI will be deployed responsibly, in compliance with laws and regulations, and in a way that does not unduly hinder economic growth. | Require confidence that AI systems being developed and deployed in their jurisdiction will be compliant with applicable laws and regulation and will respect citizens’ rights and freedoms, to trust that AI systems will not cause harm and will not damage the government’s reputation. |
| Regulators | Regulators who see increasing use of AI systems in their scope need to encourage, test and confirm that AI systems are compliant with their regulations, incentivise best practice and create the conditions for the trustworthy development and use of AI. | Require confidence that AI systems that fall within their regulatory scope are trustworthy and compliant with regulation and best-practice guidelines. To enable this, regulators need confidence that they have the appropriate regulatory mandate and the appropriate skills and resources. |
| Standards bodies | Need to convene actors including industry and academia to develop commonly accepted standards that can be evaluated or tested against, and to encourage adoption of existing standards, in order to demonstrate compliance with (1) laws and regulations and (2) other norms or principles, and to set best practices for addressing risks and establishing a common language to improve safety and trust. | Require confidence that standards are accepted by AI developers, users and affected parties, and that they are appropriate to ensure trustworthy AI where they are being used. |
| Accreditation body | Need to recognise and accredit trustworthy independent assurance providers to build trust in auditors, assessors and certifiers throughout the ecosystem. | Need accredited bodies or individuals to provide reliable evidence of good practice. |
| Professional body | Need to set and enforce professional standards of good practice and ethics, which can be important both for developers and assurance service providers. | Need to maintain oversight of the knowledge, skills, conduct and practice for AI assurance. |

LICENSE ATTRIBUTION

License: Open Government Licence v3.0

Created By: Centre for Data Ethics and Innovation (CDEI)

Source Title and Location: Needs and Responsibilities for AI Assurance